In the upscaling article, I talked about some of my LoRAs producing too many figures in a piece. I used that as the entire excuse to discuss how to just make the image bigger. But what about when you don’t want to change the figure’s size and just want to add more background? Well, doesn’t this whole application draw stuff? Can’t we just ask the machine to draw more image around the base image? The answer is yes, we can. It’s not as straightforward as resizing, because we want the new background to fit with the existing background. Adding more image around the main image is known as “Outpainting”, a term coined by the people who make DALL-E, and picked up by other image manipulation software. It is a play on the older term “Inpainting”, where you have the system redraw a portion of the existing image. Since the elements used for both of these techniques overlap greatly, I’ll go into them both.

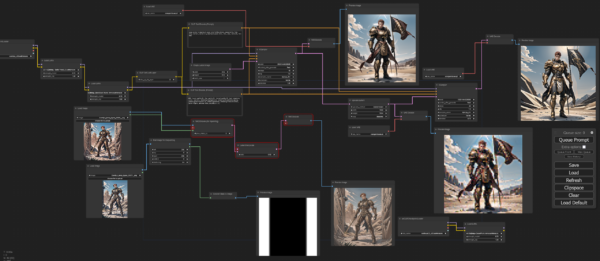

The workflow for this article is going to get more complicated than any other except the multi-combo upscaling test, and this time, the disconnected nodes off the bottom will get drawn into the flow later on. To start with, I’ve set up the basic upscaling setup from article 3, but I’ve decided to run the model line from the original checkpoint and its LoRAs. This is mainly to avoid clutter: when you have multiple LoRAs, duplicating them just takes up more space, and possibly memory. I didn’t intend to change checkpoints or LoRAs from the set I’m applying. So what am I using? One LoRA designed to add a “Battlepriest” aesthetic, which also produces high detail in a digital painting style, and one designed to give armor a black and gold marbled material.

The prompt for this step is “space marine in desertpunk power armor holding banner, space marine, 1man, solo, red hair, banner:1.5, desertpunk power armor, 1man, solo, loincloth,”. Initially, when I took the picture for the workflow, I’d included the “Full body” keyword, then realized I wanted to try to draw more of the central subject, so I needed him to not be fully in the frame. I do note that neither the original nor the upscaled version is actually holding the banner; it’s just sitting in the background. That is a perfect inpainting target to change some details. So we’re going to outpaint by expanding the smaller image with more background, and inpaint on the big one to try to get the guy to hold the banner instead of just standing in front of it.

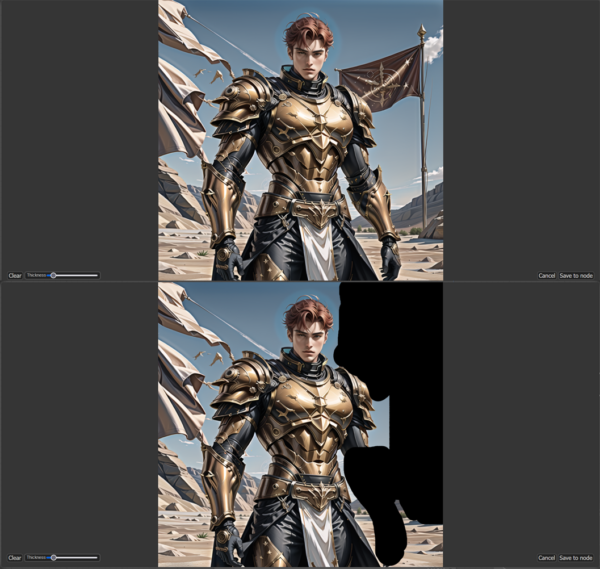

Inpainting is simpler, so we’ll start there. So what do we need to do? First off, we have to detach the upscaling section of the workflow. We do this by deleting the latent line from the first sampler to the Upscale Latent node. This removes everything on the right hand side from the loop. We also need an input that is an existing image file; that’s the “Load Image” node in the lower left. Load Image produces two outputs, “Image” and “Mask”. If all we do is load, the mask output is basically empty. So what are these? The “Image” is simple: it’s what was loaded from the file, which we haven’t selected yet. So, we’ll load the upscaled image. We now have to create an image mask so that the software knows what we want it to try to redraw. This is also done from the load node. By right-clicking on it, we get a menu which includes an option “Open in Mask Editor”.

The screenshot didn’t pick up the mouse pointer, but over the image it has a dashed circle around the arrow point. There are four controls on the mask editor screen: “Clear” which removes whatever mask you’ve painted; “Thickness” which controls the size of the brush used to paint the mask over the image; “Cancel” just aborts whatever we’ve done; “Save to Node” applies the mask to the Load Image node to be passed as output. So I’m going to paint the flag and the guy’s left arm. I want him to be holding a banner.
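Conceptually, the mask painted here is just a single-channel image the same size as the picture, where the painted pixels mark the area to redraw. Here is a minimal pure-Python sketch of a round brush. The `blank_mask` and `paint_dot` helpers are hypothetical names of mine, and I’m assuming the thickness behaves like a brush radius in pixels; the real editor’s units may differ.

```python
def blank_mask(width, height):
    """Return an empty mask: 0.0 everywhere means 'keep this pixel'."""
    return [[0.0 for _ in range(width)] for _ in range(height)]


def paint_dot(mask, cx, cy, thickness):
    """Mark every pixel within `thickness` pixels of (cx, cy) as 1.0 ('redraw')."""
    height, width = len(mask), len(mask[0])
    for y in range(max(0, cy - thickness), min(height, cy + thickness + 1)):
        for x in range(max(0, cx - thickness), min(width, cx + thickness + 1)):
            if (x - cx) ** 2 + (y - cy) ** 2 <= thickness ** 2:
                mask[y][x] = 1.0
    return mask


mask = blank_mask(64, 64)
# Drag the brush along a short diagonal stroke, one dot per step.
for step in range(10, 30):
    paint_dot(mask, step, step, thickness=4)

painted = sum(value for row in mask for value in row)
print(f"{painted:.0f} of {64 * 64} pixels marked for redraw")
```

A bigger thickness covers the target faster but makes it easier to spill onto parts of the image you wanted left alone.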

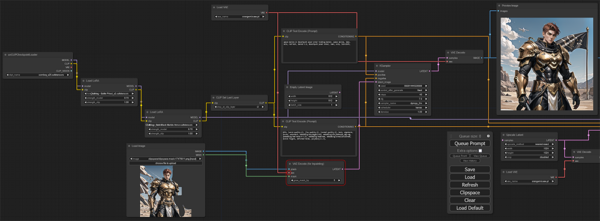

Now that we’ve got an image and a mask, what do we do with them? We need to get something into the latent channel, so we feed both into a new node, “VAE Encode (for Inpainting)”. There is another VAE Encode node that takes an image and makes a latent, but the inpainting version also takes a mask input to merge the two, making it the ideal node for this step. The only input it takes that doesn’t come off the Load Image node is a VAE line. We’ll use the same VAE Loader that we used for the decode, giving it the old reliable orangemix. Lastly, we’ve got a single output from the encode node: a latent. We connect that to our sampler, replacing the one from the Empty Latent Image node.
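For anyone who prefers to see the wiring as data, here is a rough sketch of the inpainting branch written as a Python dict in the style of a ComfyUI API-format workflow export. I’m assuming ComfyUI here, the node ids and file names are placeholders of mine, and the class and input names are from memory of the stock nodes, so check them against an export from your own install.

```python
# Hypothetical node ids and file names; only the wiring pattern matters here.
inpaint_branch = {
    "10": {"class_type": "LoadImage",
           "inputs": {"image": "upscaled.png"}},         # outputs: 0 = image, 1 = mask
    "11": {"class_type": "VAELoader",
           "inputs": {"vae_name": "orangemix.vae.pt"}},  # placeholder file name
    "12": {"class_type": "VAEEncodeForInpaint",
           "inputs": {
               "pixels": ["10", 0],   # image from Load Image
               "mask": ["10", 1],     # mask painted in the mask editor
               "vae": ["11", 0],      # same VAE that is used for decoding
               "grow_mask_by": 6,     # assumed value; adjust to taste
           }},
}


def upstream(workflow, node_id):
    """Return the ids of the nodes feeding directly into `node_id`."""
    links = workflow[node_id]["inputs"].values()
    return sorted({value[0] for value in links if isinstance(value, list)})


print(upstream(inpaint_branch, "12"))
```

Each link is a `[node id, output index]` pair, which is why the image and the mask both point at the same Load Image node with different indexes. The latent coming out of node "12" is what takes the place of the Empty Latent Image on the sampler.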

Before I kick anything off, the workflow now looks like this:

Let’s kick it off…

That doesn’t look like what I wanted. I did not change either the prompt or the seed, but that big bunker-like rocky thingy and weird armor extensions were not what I was hoping for. Time to start randomizing the seed and trying again. If I don’t see anything good coming out, I’ll have to refine the prompt or fiddle with the denoising setting. I got a bunch of wonky results, so I decided to start by adjusting down the denoise value. This erased my banner outright, but was otherwise sane. So I fiddled with denoise a bit more, eventually getting some sort of mace-shaped tower in place of the banner.
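Hunting through seeds and denoise values by hand gets tedious. Here is a small sketch of how one might lay out the combinations to try. The `sweep_settings` helper is hypothetical, the denoise values are arbitrary examples rather than recommendations, and nothing here talks to the image generator itself; it only builds the list of settings.

```python
import itertools
import random


def sweep_settings(denoise_values, seeds_per_value, rng_seed=0):
    """Pair each denoise value with the same small set of random sampler seeds."""
    rng = random.Random(rng_seed)  # fixed so the sweep itself is repeatable
    seeds = [rng.randrange(2**32) for _ in range(seeds_per_value)]
    return [{"seed": seed, "denoise": denoise}
            for denoise, seed in itertools.product(denoise_values, seeds)]


runs = sweep_settings(denoise_values=[1.0, 0.85, 0.7], seeds_per_value=3)
for run in runs:
    print(run)
```

Reusing the same seeds across every denoise value makes it easier to tell which change came from the denoise setting and which came from the seed.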

While it’s an interesting image, I don’t have my held banner. Let’s adjust the prompt to increase the weight of banner and add something to emphasize a held banner. After a few rounds of prompt adjustments, running random seeds, and tweaking the denoise setting, I can’t seem to get the arm to be holding a darn thing. Sounds like a job for a LoRA.

To the Internet!

…

And the internet has failed me. For the sake of getting this article done, I’m going to concede defeat on the objective. I want to move on to outpainting, but I’m already at article length, and outpainting is still a messy process. So I’m going to push that off to the next article.

I’ll leave you with the last image that came out of the engine. At least, it’s got a lot of flags.

I should have made more of a cliffhanger ending to the article to mention that there is a revisit of this topic later…

People can look forward to me Firsting the shit out of that.

What’s up with the background around his head?

I don’t know.

For whatever reason each round of inpainting messes with the brightness of the image and you end up with these auras and haloing. Even when it isn’t supposed to make any changes in those areas.

Plus some of the checkpoints don’t fill sky well.

There are a lot of interesting aspects to this. I’m seeing it as converting verbal language into code, and then into a visual. And just like converting energy from one form to another, there will be losses and you have to figure out how to get around that. There was a discussion last week about people who don’t have an inner dialog, and during that we found sometimes people think in images instead of words when they are doing something creative.

And of course you first have to convert the imagined(?)/desired image into language before you turn the language into code, using the “grammar” or syntax that the program understands so it will, ideally, use the code to create an image that at least half-ass resembles the image you had in mind in the first place.

Sometimes the hardest part of learning any software application is figuring out what it calls a specific concept for which YOU have a completely different term.

Yes exactly. We have a common verbal language, and that’s not even 100% agreed upon. But outside a few groups like similar artists or film makers, there is nothing close to a common visual language.

Welcome to the world of IT. As an example, nearly every phone system does the same thing (direct calls to the appropriate person/team), but they all have different terms for every step of the process. The first thing about getting up to speed on a new system is mapping the old terms to the new.

I find the lack of “Wounded Knee by Pixar” or “18th Century engraving of a Pokemon Battle ” prompts disappointing.

Does not compute.

From the ded-thred re: Lara Logan. She’s dumber than most blondes. I really like the I-94 corridor, connecting the whole east coast.

Hey, 94, 95, whatever it takes.

🙂

Her husband was in military intelligence and allegedly still has a lot of contacts in the intelligence community, which is where she says she gets her info. That said, I’m not buying it, and she should have checked a map.

Or at least be smart enough to understand that even numbered highways go east-west and odd numbered go north-south.

/looks at the 270 loop

Loops don’t go anywhere.

Love to see her face when you explain that. Also, it wasn’t the primary interstate, but a beltway – with a whole [western] loop, intact.

As always, the Two Questions apply:

Who wants me to believe this? Agency spooks, apparently.

Why do they want me to believe it? Narrativing an accident into an attack can serve any number of ends, none of them good.

Was reading some “analysis” yesterday predicting that Baltimore’s harbor will be shut down for 3 or 4 years with catastrophic consequences for the supply chain and U.S. economy. I’m betting the port is reopened on or before June 1st. A new bridge might take 4 years but the ship channel will be opened quickly.

I think that “analysis” was the tin-foil piece JI responded to yesterday.

Speaking of scifi/fantasy authors,

This lady https://twitter.com/jk_rowling/status/1775143520602329505

Made the Scottish MiniLuv blink.

https://www.bbc.com/news/uk-scotland-68712471

It’s encouraging to see some smart and successful people getting jacked by the censorship regime and coming out swinging.

She’s a billionaire. She’ll be fine until some prosecutor in England decides that she overstated the value of her property and then commences with the lawfare.

Wonder how long before she decides to move to Monaco or Luxembourg?

People with fuck you money who aren’t taking this shit has been a huge blessing and possibly our only hope.

Fuck you money isn’t enough anymore.

I would like the opportunity to disprove that assertion…

Adam Carolla has a distinction between Fuck You money and Fuck Me money (ie. Elon).

Without advance planning, Fuck You Money just means you have more to lose.

Few things in the political realm recently have made me happier than Trump making billions on Truth Social after James tried to break him.

Been meaning to post these. Still planning to try and order some custom transfer sheets for a space dwarf miniature army. I made a simple jpg of the Glib logo that should be one option. https://ibb.co/YBzng3C

The more complicated option is trying to do a “simplified” variation of the Gadsden flag. It’s hard to dig up something stylized. I came up with a couple of these – because I think they kinda represent the snek plus a space theme – but they may be too simple. Open to links/inputs/suggestions/etc.

https://ibb.co/ZHtsKGS

or

https://ibb.co/TMrm17w

I’m not getting the snake from those.

If you hadn’t mentioned it, I’d have never identified the inspiration

Why do we remind you of dwarfs?

Miniatures and stuff.. Timing when I’m actually logged in and have access to my pics. Any recommended Gadsden themed logos are highly appreciated.

As a person who enjoys making art by hand (not a fan of making digital art, AI or otherwise), I wonder where this will all lead? I could see it used for web site and book illustrations, product packaging, and eventually deep fake everything in the news. Will we ever see this on someone’s wall?

Some people are already trying to sell it.

Personally, I hate these people.

I wouldn’t be surprised to find some already hanging on walls or in galleries. There’s already been people complaining about some shows/movies using LLM’s to do art/audio for portions of them.

Facebook is being flooded with AI generated art as click bait. Sponsored content is now leading with too-good-to-be-true photos.

Some of it is just weird and freaky. What comes to mind is the pics of people with abs so defined they look like their bellies have been skinned.

Who clicks on that shit?

I dabbled in digital art some years back – just enough to create a band logo and cobble together gig posters for quite a few years (all pretty much slight variations on the same theme.) Adobe Illustrator in particular was nothing at all like drawing or painting in real life. I admire what digital artists can do with the medium, but I don’t know whether I’ll ever try to delve deeper.

It helps that one of my favorite artists came from a graphic design background: https://www.charleyharper.com/

Trying to explain a project to a subordinate.

Employee is too used to quick turnaround tickets.

No, I can’t tell you exactly what you need to add, that’s part of the project, you have to figure it out. It’s hiding in here because XYZ, and you won’t find it in any of the active environments because it wasn’t used there before. Data collection is part of the task.

But I’m not sure how to be clearer about how to find the data.

Fuck work.

I’m going to go play with an air compressor for a while. Might even attach it to an airbrush if it seems safe.

Compressor works, figured out how to adjust the regulator pressure independent of the tank pressure, found the “drain tank immediately” valve (It was open for shipping so I had to or it wouldn’t work). Connection between regulator and moisture trap is sound. Connection between moisture trap and hose makes a hissing sound…

😐

I could put some of that sealant tape on the threads, but I have a different set of 1/4″ to 1/8″ adapters coming which will let me get away with fewer linkages than what I’ve got, so I’m going to wait and let it just lose pressure.

Pros – the tank means the airbrush can run quietly at a stable pressure for a long time.

Cons – the compressor itself is LOUD! so when it is running, it’s annoying.

Admittedly it is intended for power tools, so the acceptable volume levels were higher for the designers.

Coworker thinks it’s ok to stop by my office after he gets done having a cigarette.

Dude, you stink.

Smokers have no idea how bad they smell.

don’t care how bad they smell

If I have to choose, I’ll take a smoker over a bathing-optional person any day. And I’ve run into more of the latter than the former in recent years.